A team of image processing experts from the Universitat Pompeu Fabra in Barcelona has recently developed a technique that identifies a player’s body orientation on the field over a time series using only the video feed of a football match. Adrià Arbués-Sangüesa, Gloria Haro, Coloma Ballester and Adrián Martín (2019) leveraged computer vision and deep learning techniques to develop three probability vectors that, when combined, estimate the orientation of a player’s upper torso from the positioning of their shoulders and hips, their field of view and the position of the ball.

The researchers argue that, as football has evolved, orientation has become increasingly important for adapting to the increasing pace of the game. Previously, players often had enough time on the ball to control, look up and pass. Now, a player needs to orient their body before controlling the ball in order to reduce the time it takes them to play the next pass. Adrià and his team defined orientation as the direction in which the upper body is facing, derived from the area bounded by the two shoulders and the two hips. Because they move dynamically and independently, the legs, arms and face were excluded from this definition.

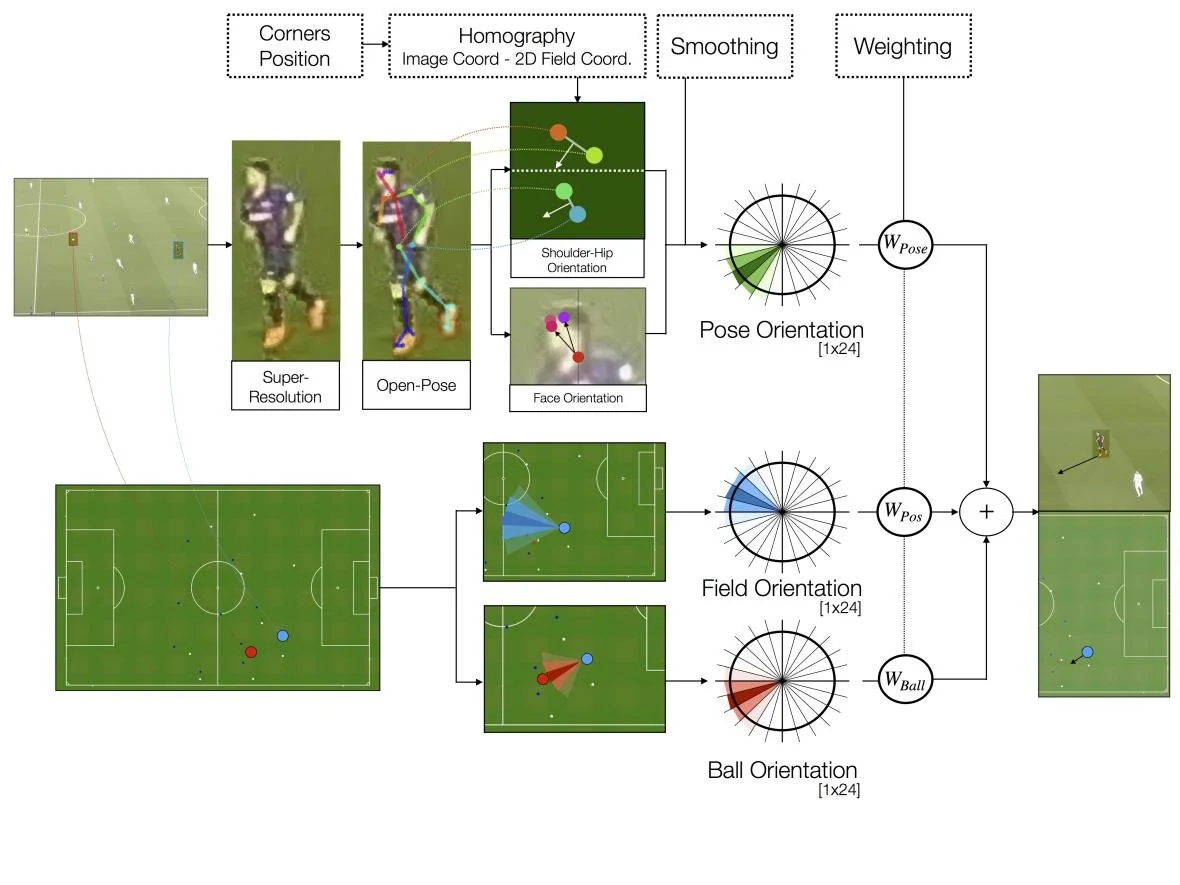

To produce this orientation estimate, they first calculated separate estimates of orientation based on three factors: pose orientation (using OpenPose and super-resolution for image enhancement), field orientation (the field of view of a player relative to their position on the field) and ball position (the effect of the ball's position on a player's orientation). These three estimates were then combined by applying different weightings to produce the final overall body orientation of a player.

1. Body Orientation Calculated From Pose

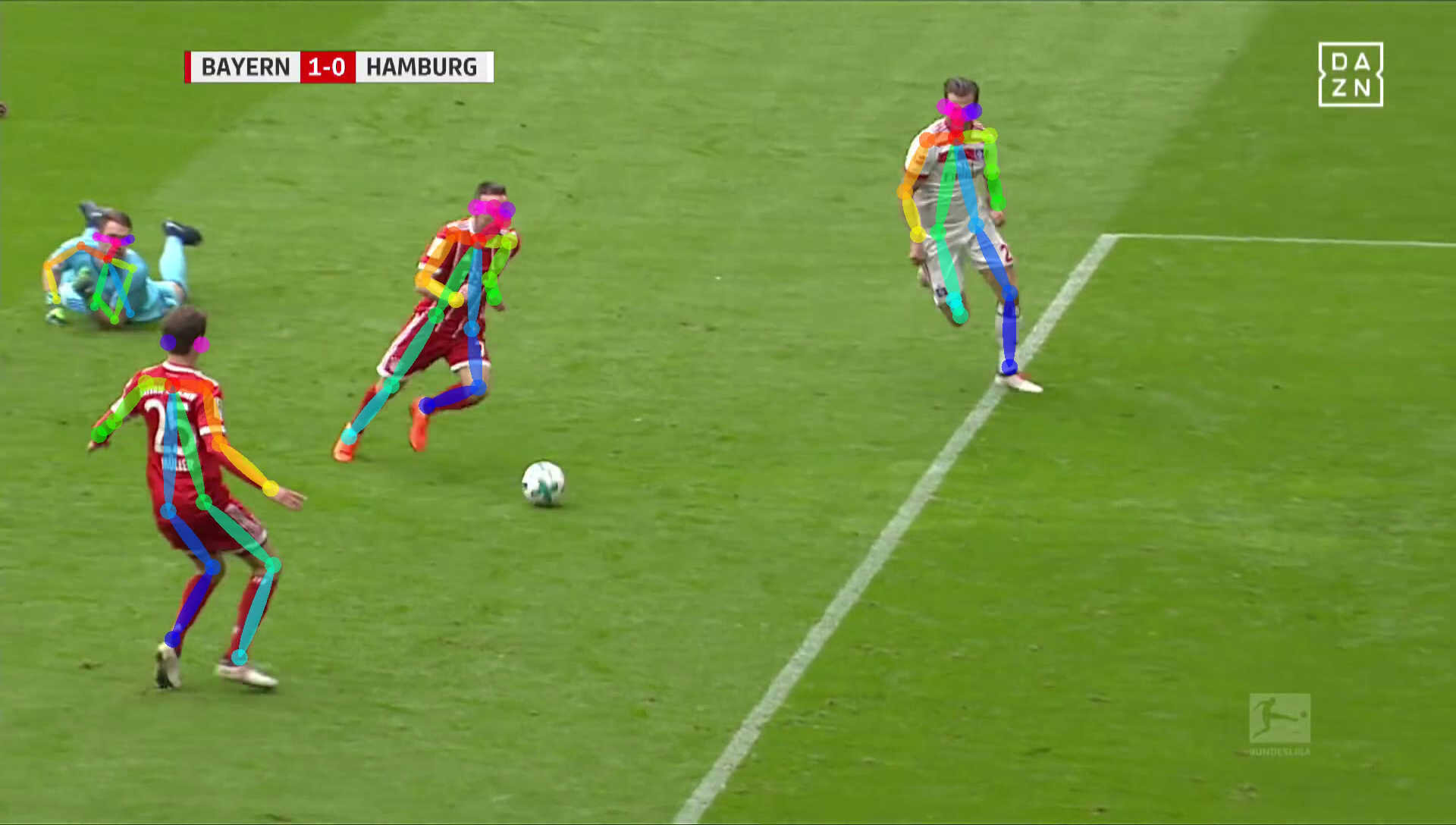

The researchers used the open-source OpenPose library. Given a frame, this library returns a human skeleton drawn over the image of each person in that frame. It can detect up to 25 body parts per person, such as elbows, shoulders and knees, and reports a confidence level for each detected part. It can also provide additional outputs such as heat maps and directions.

However, unlike in a close-up video of a person, in sports events such as a football match players can occupy very small portions of the frame, even in full HD broadcast footage. Adrià and team solved this issue by upscaling the image through super-resolution, an algorithmic method that increases image resolution by extracting details from similar images in a sequence to reconstruct other frames. In their case, the research team applied a Residual Dense Network model to improve the image quality of faraway players. This deep learning image enhancement technique helped the researchers preserve some image quality and, thanks to the clearer images, detect players’ faces through OpenPose. They were then able to detect additional points of the player’s body and accurately define the upper-torso position using the shoulder and hip points.

Source: Arbues-Sangüesa, A.; Haro, G.; Ballester C. & Martin A. (2019) Head, Shoulders, Hip and Ball... Hip and Ball! Using Pose Data to Leverage Football Player Orientation. Barça Sports Analytics Summit.

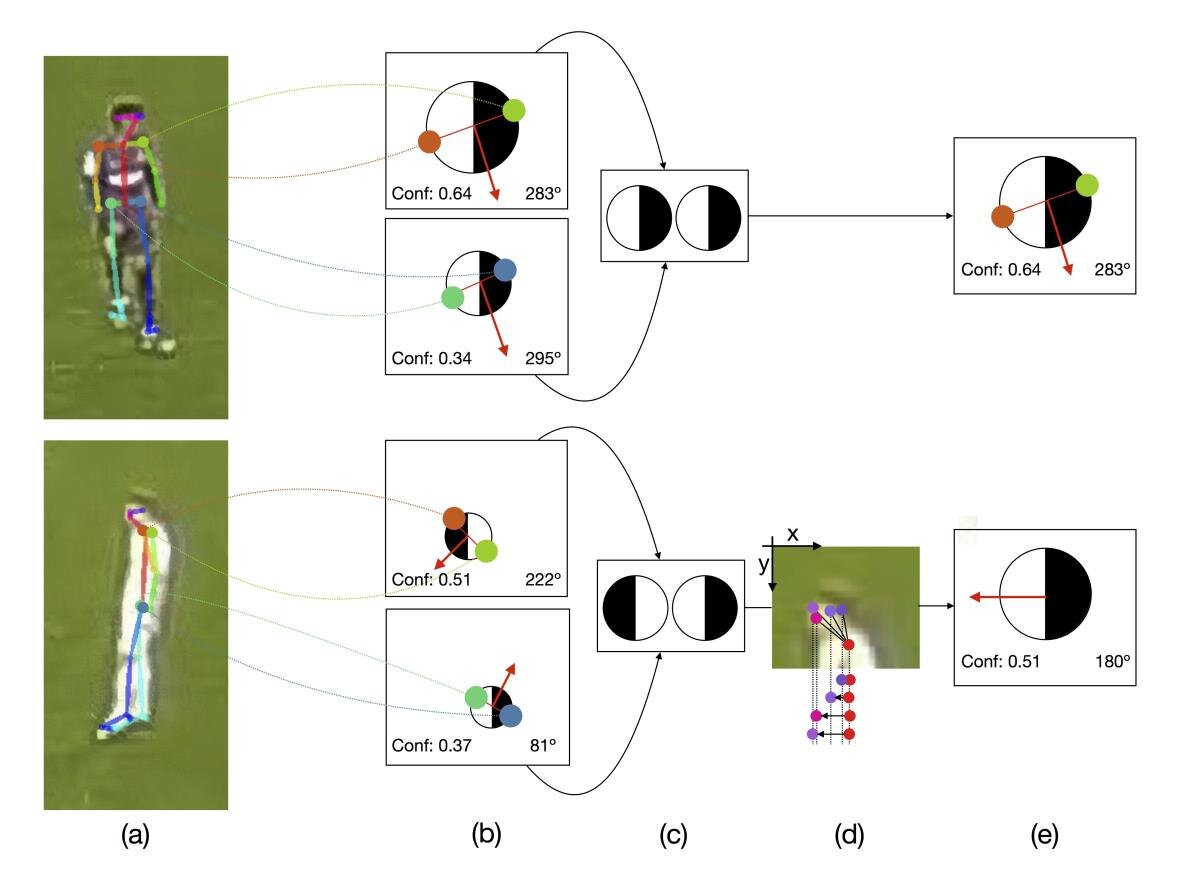

Once the image quality issue was solved and the players’ pose data had been extracted through OpenPose, the direction a player was facing was derived from the angle of the vector extracted from the centre point of the upper torso (the shoulders and hips area). OpenPose provided the coordinates of both shoulders and both hips, indicating the position of these points on a player’s body relative to each other. From these 2D vectors, the researchers could determine whether a player was facing right or left using the x and y coordinates of the shoulders and hips. For example, if the angle of the shoulders given by OpenPose is 283 degrees with a confidence of 0.64, while the angle of the hips is 295 degrees with a confidence of 0.34, the researchers use the shoulders’ angle to estimate the player’s orientation because of its higher confidence. In cases where a player is standing parallel to the camera and the angle of the hips or the shoulders is impossible to establish because the points all fall on the same coordinate in the frame, the researchers used the facial features (nose, eyes and ears) as a reference for the player’s orientation, using the neck as the x axis.
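
As an illustration of the confidence-based choice between shoulders and hips, here is a minimal Python sketch. It is not the researchers' implementation: the `Keypoint` type, the function names, the use of the lower of the two keypoint confidences as the confidence of a pair, and the `min_confidence` threshold are all assumptions made for this example. It measures the angle of the line joining the left and right keypoints in image coordinates, which is only one plausible reading of the description above.

```python
import math
from typing import NamedTuple, Optional


class Keypoint(NamedTuple):
    """A 2D pose keypoint with a detection confidence (hypothetical type)."""
    x: float
    y: float
    confidence: float


def segment_angle(left: Keypoint, right: Keypoint) -> float:
    """Angle in degrees, in [0, 360), of the vector from `left` to `right`.

    Uses image coordinates as given, so y typically grows downwards.
    """
    return math.degrees(math.atan2(right.y - left.y, right.x - left.x)) % 360.0


def pair_confidence(left: Keypoint, right: Keypoint) -> float:
    """Assumption: a pair is only as reliable as its weaker keypoint."""
    return min(left.confidence, right.confidence)


def pose_angle(l_shoulder: Keypoint, r_shoulder: Keypoint,
               l_hip: Keypoint, r_hip: Keypoint,
               min_confidence: float = 0.1) -> Optional[float]:
    """Return the angle of the more confident pair, or None if both are weak."""
    candidates = [
        (pair_confidence(l_shoulder, r_shoulder), segment_angle(l_shoulder, r_shoulder)),
        (pair_confidence(l_hip, r_hip), segment_angle(l_hip, r_hip)),
    ]
    confidence, angle = max(candidates, key=lambda c: c[0])
    return angle if confidence >= min_confidence else None
```

Returning `None` when neither pair is reliable leaves room for a fallback, such as the facial-feature reference mentioned above.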

This 2D player and ball information was then projected onto a top-down view of the football pitch to show each player’s direction. Using the four corners of the pitch, the researchers could reconstruct a 2D pitch representation that allowed them to match pixels from the match footage to the coordinates derived from OpenPose. They could therefore clearly observe whether a player in the footage was oriented towards the left or the right, as derived from their model’s pose results.
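
Mapping image pixels to pitch coordinates from four known corners is commonly done with a planar homography. The article does not say which method the researchers used, so the following is only a sketch of that standard technique in plain Python. The function names and the example pitch size of 105 by 68 metres are assumptions for illustration.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_homography(src_points, dst_points):
    """Fit the 3x3 homography (row-major, h33 = 1) mapping 4 src points to 4 dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src_points, dst_points):
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        rhs.append(v)
    return solve_linear(rows, rhs) + [1.0]


def project(h, point):
    """Apply homography `h` to an image point and return pitch coordinates."""
    x, y = point
    w = h[6] * x + h[7] * y + h[8]
    return ((h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w)


# Example: pitch corners as seen in a frame (pixels) and on a 105 x 68 m pitch.
image_corners = [(100.0, 200.0), (500.0, 200.0), (580.0, 400.0), (20.0, 400.0)]
pitch_corners = [(0.0, 0.0), (105.0, 0.0), (105.0, 68.0), (0.0, 68.0)]
H = fit_homography(image_corners, pitch_corners)
```

With `H` fitted once per camera view, `project(H, (x, y))` converts any pixel position, such as a player's feet or the ball, into top-down pitch coordinates.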

To trade some precision for robustness, the researchers clustered similar angles into a total of 24 orientation groups of 15 degrees each (e.g. 0-15 degrees, 15-30 degrees and so on), as there was little practical difference between a player facing 0 degrees and one facing 5 degrees.
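
The grouping into 24 bins of 15 degrees can be sketched in a few lines of Python. The function names are hypothetical, and treating each bin as closed on its lower edge and open on its upper edge is an assumption, since the article does not say how boundary angles are assigned.

```python
BIN_WIDTH = 15               # degrees per orientation group
NUM_BINS = 360 // BIN_WIDTH  # 24 groups


def orientation_bin(angle_degrees: float) -> int:
    """Map any angle in degrees to a bin index in 0..23."""
    # The final modulo guards against float rounding pushing the angle to 360.0.
    return int((angle_degrees % 360.0) // BIN_WIDTH) % NUM_BINS


def bin_range(index: int) -> tuple:
    """Return the (start, end) angles in degrees covered by a bin."""
    return index * BIN_WIDTH, (index + 1) * BIN_WIDTH
```

With this scheme, angles of 0 and 5 degrees fall in the same group, while 283 and 295 degrees fall in neighbouring groups (270-285 and 285-300).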

2. Body Orientation Calculated From Field View Of A Player

The researchers then quantified the field orientation of a player by setting the player’s field of view during a match to around 225 degrees. This value was only used as a backup in case everything else failed, since it was a less effective way to derive orientation than the pose-based method described above. The player’s field of view was transformed into probability vectors, similar to those used for pose orientation, with values that depend on the player’s y coordinate. For example, a right back near the touchline will have their field of view reduced to about 90 degrees, as they are very unlikely to be looking outside the pitch.

3. Orientation Calculated From Ball Positioning

The third estimate of player orientation was related to the position of the ball on the pitch. It assumes that players are affected by their position relative to the ball: players closer to the ball are more strongly oriented towards it, while the orientation of players further away may be less affected by the ball's position. Each player is therefore assigned not only an angle in relation to the ball but also a distance to it, and both are converted into probability vectors.
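
The article does not describe how angle and distance are turned into a probability vector, so the sketch below uses an invented scheme purely to illustrate the idea. It places extra probability on the orientation group that points at the ball and lets that extra weight decay exponentially with distance. The function names, the exponential decay and the `distance_scale` parameter are all assumptions, not the researchers' method.

```python
import math

NUM_BINS = 24  # 15-degree orientation groups


def ball_angle_and_distance(player_xy, ball_xy):
    """Angle in degrees [0, 360) and distance from the player to the ball."""
    dx = ball_xy[0] - player_xy[0]
    dy = ball_xy[1] - player_xy[1]
    return math.degrees(math.atan2(dy, dx)) % 360.0, math.hypot(dx, dy)


def ball_probability_vector(player_xy, ball_xy, distance_scale=20.0):
    """Hypothetical scheme: blend a peak at the ball's bin with a uniform prior.

    `distance_scale` (same units as the coordinates) controls how quickly
    the ball's influence fades; it is an illustrative parameter only.
    """
    angle, distance = ball_angle_and_distance(player_xy, ball_xy)
    ball_bin = int(angle // (360.0 / NUM_BINS)) % NUM_BINS
    influence = math.exp(-distance / distance_scale)  # 1 at the ball, towards 0 far away
    uniform = 1.0 / NUM_BINS
    vector = [(1.0 - influence) * uniform] * NUM_BINS
    vector[ball_bin] += influence
    return vector
```

Under this scheme a player 5 metres from the ball gets a sharply peaked vector, while a player 50 metres away gets one that is close to uniform, matching the intuition that distant players are less constrained by the ball.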

Combination Of All The Three Estimates Into A Single Vector

Adrià and the research team contextualized these results by combining all three estimates into a single vector, applying a different weight to each. For instance, they found that field of view accounted for a much smaller proportion of the orientation probability than the other two estimates. The weighted sum of the vectors from the three estimates corresponds to the final player orientation, that is, the final angle of the player. By following the same process for each player and drawing their orientation onto the image of the field, player movements can be tracked throughout the match while they remain in frame.
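
A weighted sum of probability vectors followed by picking the most likely orientation group might look like the sketch below. The function names are hypothetical, returning the centre of the winning bin is an assumption, and the weights and four-bin vectors in the example are placeholders chosen for readability rather than values from the research.

```python
def combine_estimates(vectors, weights):
    """Weighted sum of equally sized probability vectors, renormalised to sum to 1."""
    if len(vectors) != len(weights):
        raise ValueError("need exactly one weight per vector")
    size = len(vectors[0])
    if any(len(v) != size for v in vectors):
        raise ValueError("all vectors must have the same length")
    combined = [sum(w * v[i] for v, w in zip(vectors, weights)) for i in range(size)]
    total = sum(combined)
    return [c / total for c in combined]


def most_likely_angle(combined, bin_width=15.0):
    """Return the centre angle (degrees) of the most probable bin."""
    best = max(range(len(combined)), key=combined.__getitem__)
    return (best + 0.5) * bin_width


# Toy example with 4 bins of 90 degrees and placeholder weights.
pose = [0.7, 0.1, 0.1, 0.1]
field_of_view = [0.25, 0.25, 0.25, 0.25]
ball = [0.1, 0.7, 0.1, 0.1]
combined = combine_estimates([pose, field_of_view, ball], [0.5, 0.2, 0.3])
angle = most_likely_angle(combined, bin_width=90.0)
```

In this toy example pose and ball disagree, and the larger pose weight decides the outcome, so the first bin wins.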

In terms of accuracy, the method detected at least 89% of all required body parts for players through OpenPose, and its left/right orientation classification achieved 92% accuracy when compared with sensor data. The initial weighting of the overall orientation was 0.5 for pose, 0.15 for field of view and <0.5 for ball position, suggesting that pose data is the strongest predictor of body orientation. Field of view was the least accurate estimate, with an average error of 59 degrees, and could be excluded altogether. Ball orientation performs well in estimating orientation, but pose orientation is the stronger predictor in terms of degree of error. However, the combination of all three outperforms the individual estimates.

One limitation the researchers found in their approach is the varying camera angles and video quality available from club to club, or even between teams of the same club. For example, footage of youth team matches had poor quality and camera angles, making it impossible for OpenPose to detect players at certain times, even when they were on screen.

Finally, Adrià et al. suggest that video analysts could greatly benefit from this automated orientation detection when analyzing match footage, with directional arrows printed on the frame to help identify cases where orientation is critical to developing a player or a particular play. The highly visual nature of the solution makes it very easy for players to understand when they are presented with information about their body positioning during match play, both in the first team and in the development of youth players. This metric could also be incorporated into the calculation of the conditional probability of scoring a goal in various game situations, for example by including it in Expected Goals models. Ultimately, these innovative advances in automatic data collection can relieve many performance analysts of hours of manually coding footage when tracking match events.

Citations:

Arbues-Sangüesa, A.; Haro, G.; Ballester C. & Martin A. (2019) Head, Shoulders, Hip and Ball... Hip and Ball! Using Pose Data to Leverage Football Player Orientation. Barça Sports Analytics Summit.